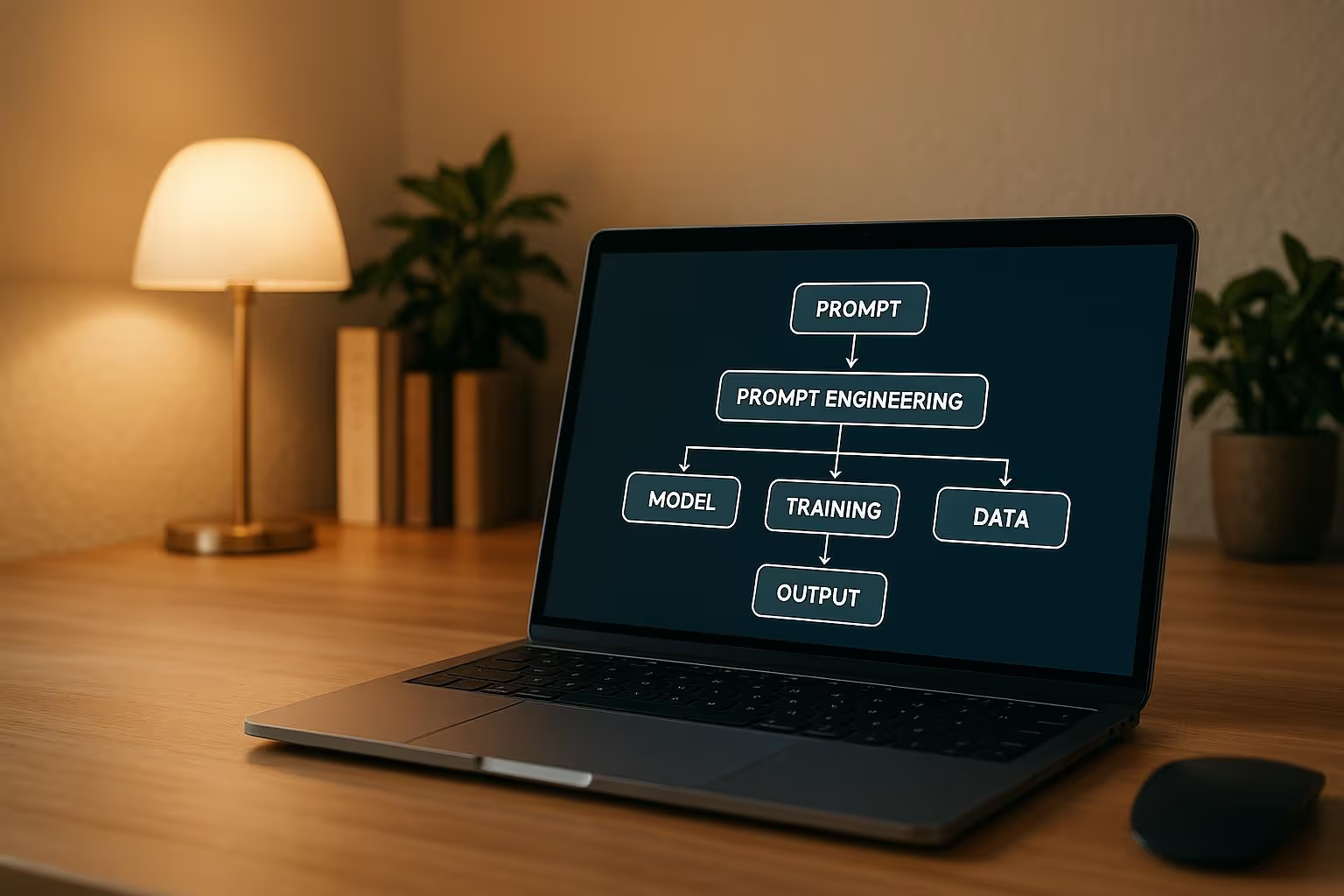

Prompt engineering is the backbone of effective AI workflows, influencing automation, content generation, and data analysis. Poorly designed prompts can lead to inefficiencies, while well-crafted ones enhance performance and reduce costs. This article explores the top tools for prompt engineering, focusing on their strengths, features, and enterprise use cases.

| Tool | Multi-Model Support | Key Features | Enterprise Focus |
|---|---|---|---|
| Prompts.ai | 35+ models (GPT-5, Claude) | Cost tracking, version control | Governance, compliance, cost savings |
| LangChain | OpenAI, Cohere, Anthropic | Workflow chains, memory tools | Limited enterprise features |
| PromptLayer | OpenAI, Anthropic, Cohere | Prompt tracking, REST API | Basic team management |
| Agenta | GPT-3.5-turbo, others | Custom workflows, fine-tuning | Collaboration tools |
| OpenPrompt | Hugging Face models | Dataset testing, Python-based | Academic licensing |
| Prompt Engine | Limited | Simple API integration | Minimal enterprise support |
| PromptPerfect | GPT, Claude, Midjourney | Optimization tools | Browser extensions |
| LangSmith | Model-agnostic | Self-hosting, Kubernetes | Enterprise-grade features |

These tools cater to a range of needs, from cost management to research and development. Whether you're scaling enterprise AI or refining academic workflows, the right platform depends on your goals, infrastructure, and budget.

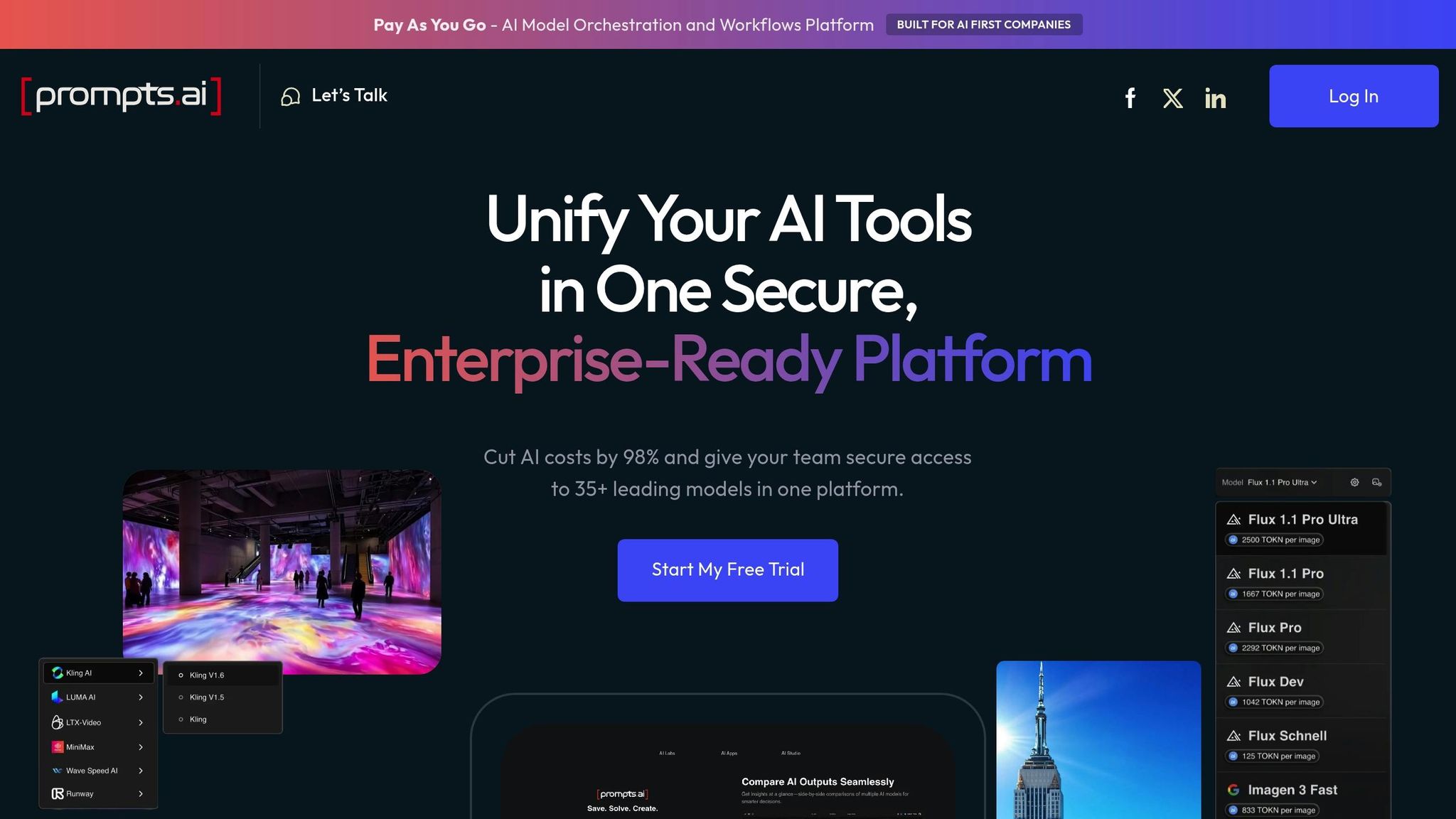

Prompts.ai tackles the challenges of tool sprawl and high costs by bringing over 35 leading AI models into a single, secure platform. This enterprise-grade solution simplifies operations by eliminating the need for multiple subscriptions and scattered workflows, offering a streamlined approach to AI orchestration.

Prompts.ai provides access to 35+ models, including GPT-5, Grok-4, Claude, Flux Pro, and Kling. Teams can experiment without juggling multiple accounts, which makes the platform well suited to comparative analyses and A/B tests across models. Because switching models happens inside the same prompt engineering environment, these comparisons do not require moving prompts between tools.

The platform includes version control for prompts: users can track each iteration, compare how versions perform across models, and keep detailed audit trails. Side-by-side comparisons of models and prompt variants let teams optimize for specific goals.
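To make "version control plus audit trail" concrete, here is a minimal sketch of the idea in plain Python. It is not Prompts.ai's implementation or API; the `PromptRegistry` and `PromptVersion` names are hypothetical, and a real system would persist versions and audit entries instead of keeping them in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional, Tuple


@dataclass
class PromptVersion:
    version: int
    text: str
    author: str
    created_at: str


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store: each save adds a version and an audit entry."""

    versions: Dict[str, List[PromptVersion]] = field(default_factory=dict)
    # Append-only audit trail: (timestamp, author, action, prompt name, version)
    audit_log: List[Tuple[str, str, str, str, int]] = field(default_factory=list)

    def save(self, name: str, text: str, author: str) -> PromptVersion:
        history = self.versions.setdefault(name, [])
        entry = PromptVersion(
            version=len(history) + 1,
            text=text,
            author=author,
            created_at=datetime.now(timezone.utc).isoformat(),
        )
        history.append(entry)
        self.audit_log.append((entry.created_at, author, "save", name, entry.version))
        return entry

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        """Return the latest version, or a specific one (versions are 1-based)."""
        history = self.versions[name]
        return history[-1] if version is None else history[version - 1]


registry = PromptRegistry()
registry.save("summarize", "Summarize the text.", author="ana")
registry.save("summarize", "Summarize the text in three bullet points.", author="ben")
print(registry.get("summarize").version)   # 2
print(registry.get("summarize", 1).text)   # Summarize the text.
```

Keeping old versions immutable and only appending new ones is what makes it possible to compare a prompt's behavior before and after a change and to answer "who changed what, when".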

Prompts.ai’s testing framework adds structure to prompt evaluation. Organizations can set benchmarks, measure improvements against them, and replace ad-hoc experimentation with repeatable processes that produce consistent results across departments.
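Benchmark-driven evaluation boils down to scoring every prompt variant against the same fixed test set. The sketch below assumes exact-match accuracy as the metric and uses a deterministic `fake_model` function (hypothetical) in place of a real model call, so the effect of prompt wording on the score is easy to see. It illustrates the general technique, not Prompts.ai's testing framework.

```python
import operator
from typing import Callable, List, Tuple

# A fixed benchmark of (question, expected answer) pairs; illustrative data only.
BENCHMARK: List[Tuple[str, str]] = [("2 + 2", "4"), ("3 * 3", "9"), ("10 - 7", "3")]

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call. It answers tersely only when the
    prompt asks for a bare number, so prompt wording changes the score."""
    left, op, right = prompt.rsplit(":", 1)[-1].split()
    answer = str(OPS[op](int(left), int(right)))
    return answer if "only the number" in prompt else f"The answer is {answer}."


def evaluate(template: str, model: Callable[[str], str]) -> float:
    """Exact-match accuracy of one prompt template over the whole benchmark."""
    hits = sum(
        model(template.format(question=q)).strip() == expected
        for q, expected in BENCHMARK
    )
    return hits / len(BENCHMARK)


VARIANTS = {
    "v1": "Compute: {question}",
    "v2": "Reply with only the number. Compute: {question}",
}
scores = {name: evaluate(t, fake_model) for name, t in VARIANTS.items()}
print(scores)  # {'v1': 0.0, 'v2': 1.0}
```

Because the benchmark stays fixed, a score change can be attributed to the prompt change rather than to different test inputs.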

Prompts.ai is designed with enterprise needs in mind, offering comprehensive governance tools, real-time visibility, and cost control features. The platform provides full transparency into AI usage, tracking every token and its associated costs across teams and use cases.

Prompts.ai states that its pay-as-you-go TOKN credit system can reduce AI software costs by up to 98%. Because there are no recurring subscription fees, costs track actual usage. A built-in FinOps layer adds real-time tracking and optimization recommendations to help keep AI spending aligned with business goals.
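Tracking "every token and its associated costs across teams" amounts to attributing each request's token counts to a team and model, then pricing them. Here is a minimal sketch, assuming hypothetical model names and per-1,000-token prices; it does not model TOKN credits or any vendor's actual rates.

```python
from collections import defaultdict
from typing import Dict, Tuple

# Hypothetical USD prices per 1,000 tokens; real rates differ by provider and model.
PRICE_PER_1K = {"model-a": 0.01, "model-b": 0.002}


class UsageLedger:
    """Attributes token usage to (team, model) pairs and converts it to cost."""

    def __init__(self) -> None:
        self.tokens: Dict[Tuple[str, str], int] = defaultdict(int)

    def record(self, team: str, model: str, prompt_tokens: int, completion_tokens: int) -> None:
        self.tokens[(team, model)] += prompt_tokens + completion_tokens

    def cost_by_team(self) -> Dict[str, float]:
        totals: Dict[str, float] = defaultdict(float)
        for (team, model), count in self.tokens.items():
            totals[team] += count / 1000 * PRICE_PER_1K[model]
        # Round to avoid displaying floating-point noise in currency amounts
        return {team: round(cost, 6) for team, cost in totals.items()}


ledger = UsageLedger()
ledger.record("support", "model-a", prompt_tokens=1200, completion_tokens=300)
ledger.record("support", "model-b", prompt_tokens=4000, completion_tokens=1000)
ledger.record("marketing", "model-a", prompt_tokens=500, completion_tokens=500)
print(ledger.cost_by_team())  # {'support': 0.025, 'marketing': 0.01}
```

Keying usage by both team and model is what allows a cost dashboard to show which team drives spending and which model choice is responsible for it.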

| Feature | Prompts.ai Capability |
|---|---|
| Transparency | High - Real-time usage visibility, audit trails |
| Compliance | High - Enterprise-grade governance controls |
| Security | High - Enterprise-level data protection |
| Scalability | Excellent - Cloud-native, pay-per-use scaling |

This combination of governance and cost control integrates smoothly with existing workflows, ensuring efficiency without sacrificing oversight.

Prompts.ai supports end-to-end workflow automation, so teams can embed prompt engineering into their existing systems. Its integrations are designed to complement established processes rather than disrupt them.

The platform also encourages collaboration through its community features and Prompt Engineer Certification program. Organizations can benefit from expert-designed "Time Savers" and connect with a global network of prompt engineers. This approach not only builds internal expertise but also taps into the collective knowledge of the broader AI community, making adoption smoother and more impactful for enterprise workflows.

LangChain is an open-source framework designed to help developers build AI workflows ranging from simple chatbots to intricate, multi-step reasoning systems.

LangChain's architecture is built to support a wide range of language model providers, including OpenAI, Anthropic, Cohere, and Hugging Face. Its modular and provider-agnostic design allows teams to switch between models effortlessly. For example, you can use GPT-4 for complex tasks while relying on faster, more cost-effective models for simpler operations. This flexibility ensures that performance and expenses are balanced across various AI workflows.
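Because the framework is provider-agnostic, the decision of which model handles which request can live in your own code. The sketch below shows one simple routing policy in plain Python (it is not LangChain's routing API): complex task types or very long prompts go to a larger model, everything else to a cheaper one. The model identifiers, task names, and the 2,000-character threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelChoice:
    name: str
    reason: str


# Hypothetical model identifiers; substitute whatever your providers expose.
LARGE_MODEL = "large-model"
SMALL_MODEL = "small-model"
COMPLEX_TASKS = {"multi_step_reasoning", "code_generation", "contract_analysis"}


def route(task_type: str, prompt: str, max_small_chars: int = 2000) -> ModelChoice:
    """Send complex or very long requests to the larger model, everything else to the cheaper one."""
    if task_type in COMPLEX_TASKS:
        return ModelChoice(LARGE_MODEL, f"{task_type} needs stronger reasoning")
    if len(prompt) > max_small_chars:
        return ModelChoice(LARGE_MODEL, "prompt is longer than the small-model budget")
    return ModelChoice(SMALL_MODEL, "simple task; a cheaper model suffices")


print(route("classification", "Is this email spam?").name)   # small-model
print(route("code_generation", "Write a CSV parser.").name)  # large-model
```

Returning a reason alongside the model name makes routing decisions easy to log and audit when you later tune the policy.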

LangChain goes beyond model support by simplifying AI integration. It offers pre-built connections to popular databases, APIs, and document storage systems, enabling developers to create applications that access real-time data and perform a variety of tasks.

The framework's chain concept is a standout feature, allowing developers to link multiple AI processes together. This means the output of one model can seamlessly become the input for another, making it ideal for tasks like document analysis. For instance, a workflow might extract data, summarize it, and then use that summary to generate tailored responses.
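The extract, summarize, respond workflow described above is function composition: each step's output is the next step's input. This plain-Python sketch shows the idea with deterministic stand-ins (`extract`, `summarize`, `respond`, all hypothetical) in place of model calls; LangChain's own chain syntax differs and adds features such as streaming and retries.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def chain(*steps: Step) -> Step:
    """Compose steps left to right: each step's output becomes the next step's input."""
    return lambda text: reduce(lambda acc, step: step(acc), steps, text)


# Hypothetical deterministic stand-ins for three model calls.
def extract(doc: str) -> str:
    return "\n".join(line for line in doc.splitlines() if line.startswith("FACT:"))


def summarize(facts: str) -> str:
    items = [line[len("FACT:"):].strip() for line in facts.splitlines()]
    return f"{len(items)} facts: " + "; ".join(items)


def respond(summary: str) -> str:
    return f"Thanks for your report. Summary: {summary}"


pipeline = chain(extract, summarize, respond)
doc = "FACT: order 123 arrived late\nunrelated line\nFACT: customer asked for a refund"
print(pipeline(doc))
# Thanks for your report. Summary: 2 facts: order 123 arrived late; customer asked for a refund
```

Since every step shares the same `str -> str` shape, any step can be swapped for a real model call, or tested on its own, without touching the others.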

Additionally, LangChain includes advanced memory management tools, enabling AI applications to retain context across conversations or sessions. This capability is crucial for creating chatbots and virtual assistants that can reference prior interactions, delivering a more coherent and personalized user experience.
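One common form of conversational memory is a sliding window: keep the last few exchanges and replay them ahead of each new message. The sketch below is a minimal, hypothetical `WindowMemory` class written for illustration; it is not one of LangChain's memory classes.

```python
from collections import deque
from typing import Deque, Tuple


class WindowMemory:
    """Keeps the last `max_turns` exchanges and replays them ahead of each new message."""

    def __init__(self, max_turns: int = 3) -> None:
        # deque(maxlen=...) drops the oldest turn automatically once the window is full
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def build_prompt(self, new_message: str) -> str:
        lines = [f"User: {u}\nAssistant: {a}" for u, a in self.turns]
        lines.append(f"User: {new_message}\nAssistant:")
        return "\n".join(lines)


memory = WindowMemory(max_turns=2)
memory.add("Hi, I'm Ana.", "Hello Ana!")
memory.add("I like tea.", "Noted.")
memory.add("I live in Lisbon.", "Nice city.")  # pushes out the first exchange
print(memory.build_prompt("Where do I live?"))
```

The window keeps prompt length bounded, but it also forgets older facts: in this example the user's name is no longer in the prompt. Summarizing older turns instead of dropping them is a common alternative with a different cost and accuracy trade-off.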

To further support development, LangChain provides an ecosystem of tools, including prompt templates, output parsers, and evaluation metrics. These resources make it easier to move from concept to deployment and can cut development timelines from months to weeks. Developers can use community-contributed tools or build custom modules, and plug either into LangChain's framework.
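Prompt templates and output parsers are two halves of one contract: the template tells the model what shape to return, and the parser rejects anything that does not match. This sketch uses only the standard library (`string.Template` and `json`), not LangChain's template or parser classes; the sentiment task and the hard-coded model reply are hypothetical.

```python
import json
from string import Template

# string.Template uses $placeholders, so the literal JSON braces need no escaping.
SENTIMENT_PROMPT = Template(
    "Classify the sentiment of the review below.\n"
    'Reply with JSON like {"label": "positive", "confidence": 0.9}.\n'
    "Review: $review"
)


def parse_sentiment(raw: str) -> dict:
    """Output parser: turn the model's raw text into a validated dict, raising on malformed output."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if data.get("label") not in {"positive", "negative"}:
        raise ValueError(f"unexpected label: {data.get('label')!r}")
    confidence = float(data.get("confidence", -1))
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence out of range: {confidence}")
    return {"label": data["label"], "confidence": confidence}


prompt = SENTIMENT_PROMPT.substitute(review="Fast shipping, great quality.")
# A hard-coded reply stands in for the model response (hypothetical).
print(parse_sentiment('{"label": "positive", "confidence": 0.92}'))
```

Raising an error on malformed output, rather than passing it downstream, gives the calling code a clear point to retry the request or fall back.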

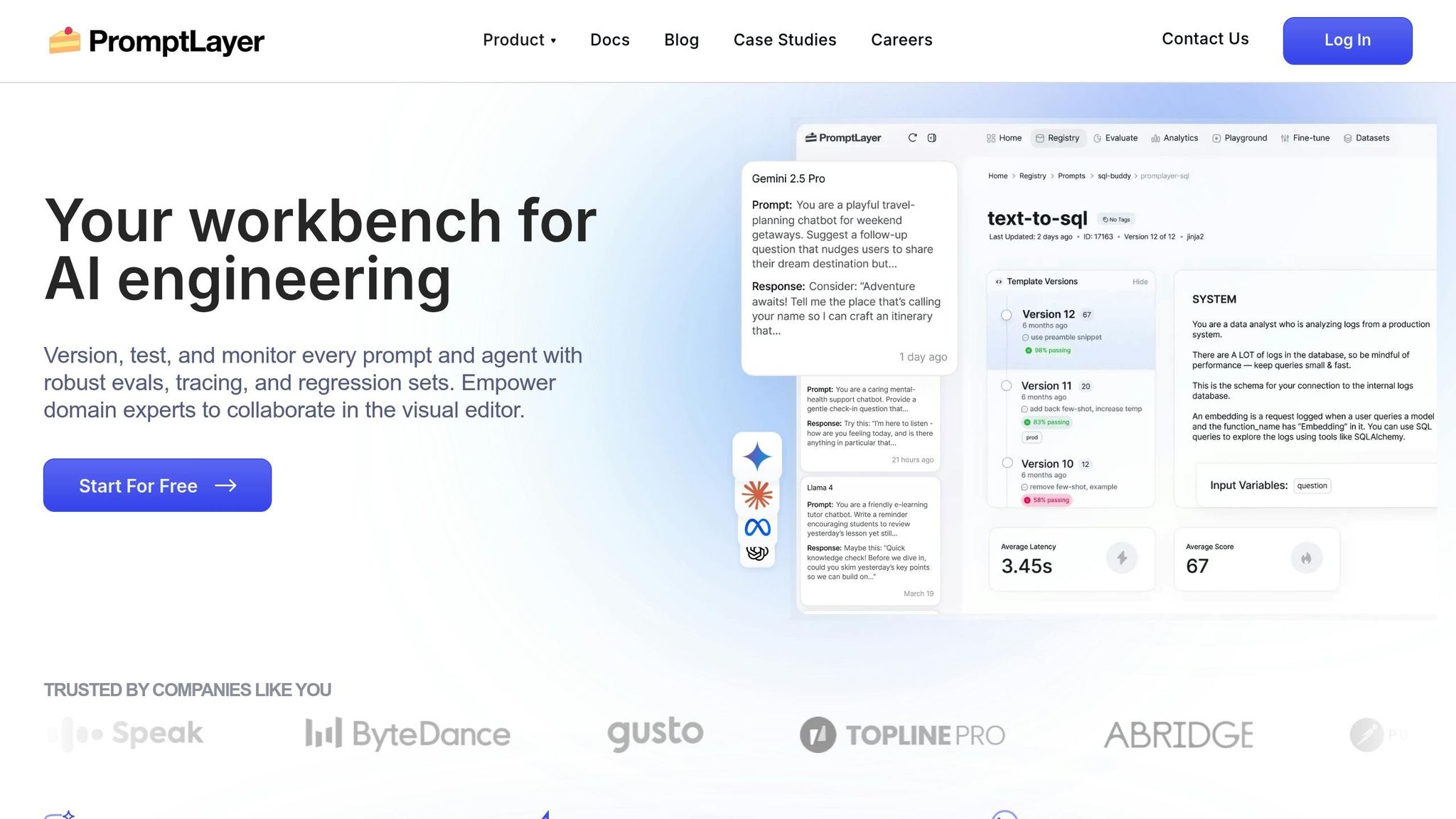

PromptLayer tracks prompt iterations and monitors their performance, so teams can check whether their prompts still meet their goals. It supports providers such as OpenAI, Anthropic, and Cohere, offers a REST API and webhook support, and includes basic team management.
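The core of prompt tracking is recording, for every request, which prompt version was used, what came back, and how long it took. The sketch below does this with a hypothetical `track_prompt` decorator and an in-memory `REQUEST_LOG` list; it illustrates the general pattern and does not reproduce PromptLayer's SDK.

```python
import time
from functools import wraps
from typing import Any, Callable, Dict, List

# In a real setup these records would go to a tracking service; a list keeps the sketch self-contained.
REQUEST_LOG: List[Dict[str, Any]] = []


def track_prompt(template_name: str, version: int) -> Callable:
    """Decorator that records which prompt version produced which response, and how fast."""
    def decorator(call_model: Callable[[str], str]) -> Callable[[str], str]:
        @wraps(call_model)
        def wrapper(prompt: str) -> str:
            start = time.perf_counter()
            response = call_model(prompt)
            REQUEST_LOG.append({
                "template": template_name,
                "version": version,
                "prompt": prompt,
                "response": response,
                "latency_s": time.perf_counter() - start,
            })
            return response
        return wrapper
    return decorator


@track_prompt("greeting", version=2)
def call_model(prompt: str) -> str:
    # Hypothetical stand-in for a provider SDK call.
    return prompt.upper()


call_model("say hello")
print(REQUEST_LOG[-1]["template"], REQUEST_LOG[-1]["version"], REQUEST_LOG[-1]["response"])
# greeting 2 SAY HELLO
```

With the template name and version on every record, latency or quality can later be grouped by version to see whether a prompt change helped.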

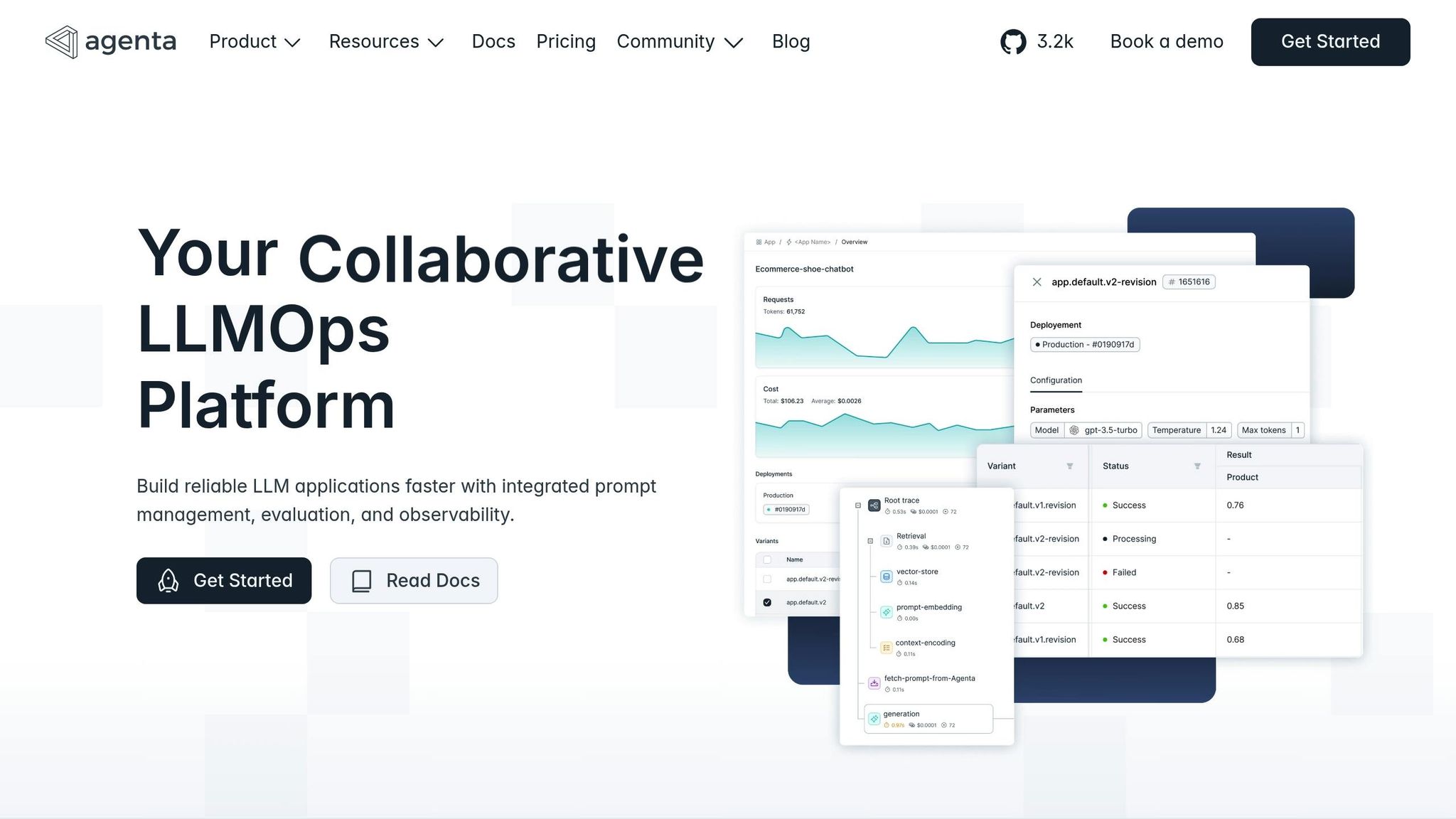

Agenta integrates prompt engineering with existing AI systems and works with models such as OpenAI's GPT-3.5-turbo. The platform lets teams experiment across multiple models to handle a variety of tasks.

Agenta stands out for its ability to integrate various large language models, enabling a wide range of applications. Whether you're focusing on text summarization, content creation, or more intricate workflows, Agenta ensures you’re not tied to a single provider. This adaptability allows users to tailor their AI solutions to specific requirements.

Agenta also simplifies connecting to existing systems. Its Custom Workflows feature, introduced in April 2025, lets developers link their language model applications to the platform with minimal code. For example, an app that uses OpenAI's GPT-3.5-turbo to summarize articles or write tweets can be connected through a short configuration with the Python SDK.

The platform also auto-generates an OpenAPI schema and provides an interactive user interface for the connected application. Teams can then tune parameters such as the embedding model, the top-K value, and the number of reasoning steps to optimize their workflows.
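To show what a parameter like top-K controls, here is a small retrieval sketch: documents are ranked by cosine similarity to a query, and `top_k` decides how many are passed on to the model. The `RetrievalConfig` class, the document names, and the 2-D vectors are hypothetical; in practice an embedding model (itself a tunable choice) produces the vectors, and this is not Agenta's SDK.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class RetrievalConfig:
    # Hypothetical tunable parameter of the kind described above (top-K).
    top_k: int = 2


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query: Sequence[float], docs: List[Tuple[str, List[float]]], config: RetrievalConfig) -> List[str]:
    """Rank documents by cosine similarity to the query and keep the top_k names."""
    ranked = sorted(docs, key=lambda doc: cosine(query, doc[1]), reverse=True)
    return [name for name, _ in ranked[: config.top_k]]


# Toy 2-D vectors; in practice an embedding model produces these.
DOCS = [("pricing", [1.0, 0.0]), ("refunds", [0.8, 0.6]), ("careers", [0.0, 1.0])]
query = [1.0, 0.1]
print(retrieve(query, DOCS, RetrievalConfig(top_k=1)))  # ['pricing']
print(retrieve(query, DOCS, RetrievalConfig(top_k=2)))  # ['pricing', 'refunds']
```

A larger top-K gives the model more context at the cost of longer prompts and more irrelevant material, which is why it is worth exposing as a tunable parameter rather than hard-coding it.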

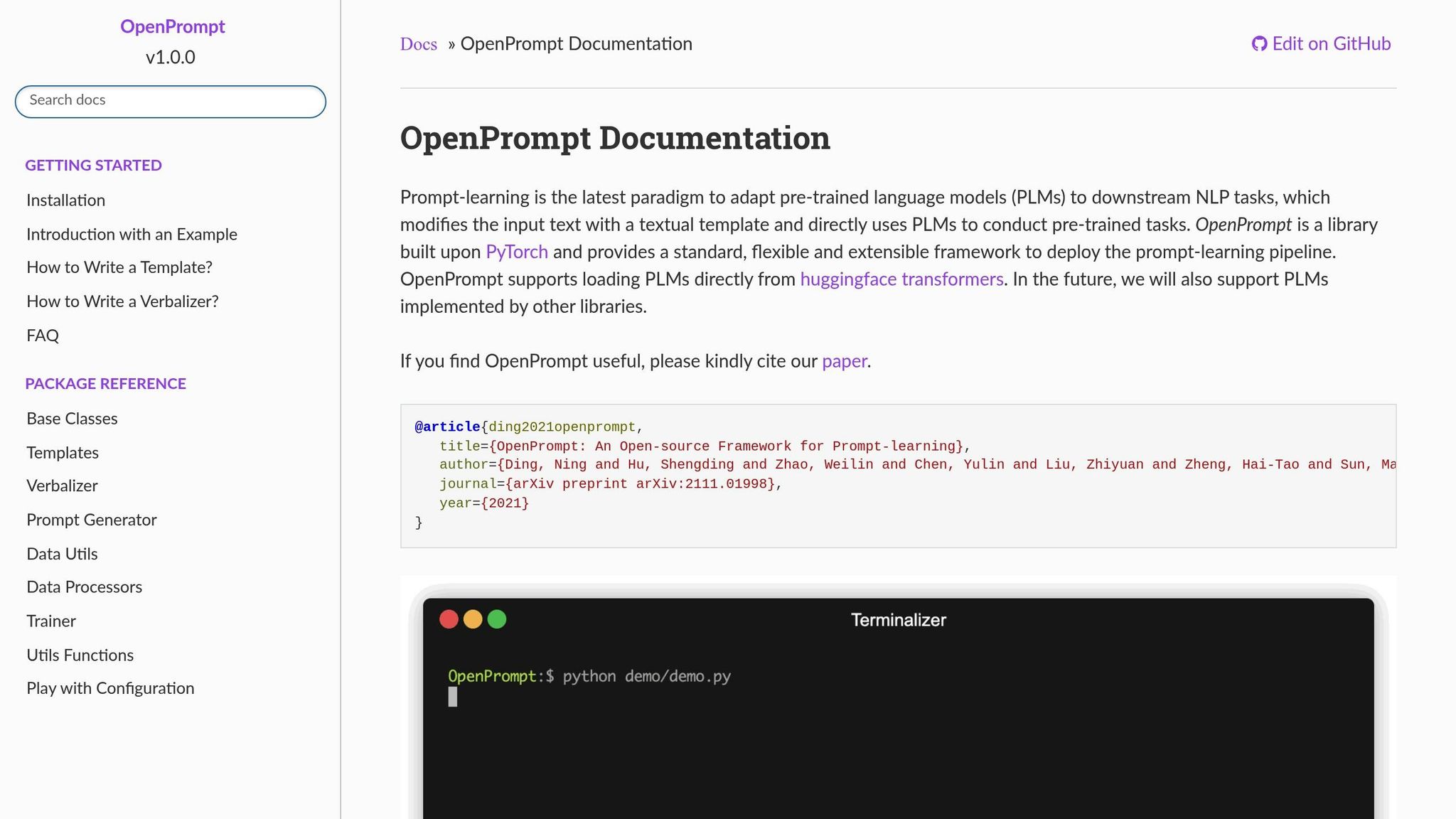

OpenPrompt combines the adaptability of open-source tools with seamless integration into existing machine learning workflows. Built on Python, this framework focuses on datasets and testing rather than intricate prompt chaining, offering teams precise control over their prompt-learning processes.

OpenPrompt works effortlessly with Pre-trained Language Models (PLMs) from Hugging Face's Transformers library. By tapping into this expansive ecosystem, users can choose the most suitable model for their specific natural language processing (NLP) needs. Its architecture allows for quick and efficient model experimentation, enabling teams to refine their approaches without unnecessary delays.

OpenPrompt has also been applied to recommendation systems, a practical, high-demand use case. Its support for a wide variety of models provides a solid foundation for iterative testing and continuous improvement.

The framework's standout feature is its focus on datasets and testing, moving away from traditional prompt chaining. OpenPrompt enables iterative experimentation, recognizing that the effectiveness of prompts hinges on thoughtful design and rigorous testing. While it doesn’t include built-in version control, it excels at testing prompt performance across diverse use cases.

To simplify the workflow, OpenPrompt offers the PromptDataLoader, which combines a Tokenizer, Template, and TokenizerWrapper into a single data-preparation step. This speeds up data preparation and keeps the pipeline easy to adjust as templates are refined and retested.
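The sketch below imitates the three stages that PromptDataLoader combines: a template wraps each example in prompt text with a `[MASK]` slot, a tokenizer turns the text into ids, and a wrapper pads or truncates to a fixed length before batching. All classes here (`Template`, `ToyTokenizer`, `TokenizerWrapper`, `prompt_data_loader`) are simplified, hypothetical stand-ins that mirror the concepts only; they are not OpenPrompt's classes, and real code would use a Hugging Face tokenizer.

```python
from typing import Dict, Iterable, Iterator, List


class Template:
    """Wraps a raw example in prompt text that contains a [MASK] slot for the model to fill."""

    def __init__(self, text: str) -> None:
        self.text = text

    def wrap(self, example: Dict[str, str]) -> str:
        return self.text.format(**example)


class ToyTokenizer:
    """Whitespace tokenizer that assigns a new id to each unseen token (id 0 is padding)."""

    def __init__(self) -> None:
        self.vocab: Dict[str, int] = {"[PAD]": 0}

    def encode(self, text: str) -> List[int]:
        return [self.vocab.setdefault(tok, len(self.vocab)) for tok in text.split()]


class TokenizerWrapper:
    """Truncates or pads ids to max_seq_length and builds the matching attention mask."""

    def __init__(self, tokenizer: ToyTokenizer, max_seq_length: int) -> None:
        self.tokenizer = tokenizer
        self.max_seq_length = max_seq_length

    def __call__(self, text: str) -> Dict[str, List[int]]:
        # Naive tail truncation; a real wrapper would shorten the input text, not the [MASK] slot.
        ids = self.tokenizer.encode(text)[: self.max_seq_length]
        pad = self.max_seq_length - len(ids)
        return {"input_ids": ids + [0] * pad, "attention_mask": [1] * len(ids) + [0] * pad}


def prompt_data_loader(dataset: Iterable[Dict[str, str]], template: Template,
                       wrapper: TokenizerWrapper, batch_size: int) -> Iterator[List[Dict[str, List[int]]]]:
    """Template, then tokenizer and wrapper, for each example, yielded in fixed-size batches."""
    batch: List[Dict[str, List[int]]] = []
    for example in dataset:
        batch.append(wrapper(template.wrap(example)))
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


dataset = [{"text_a": "great movie"}, {"text_a": "weak plot"}, {"text_a": "fine"}]
template = Template("{text_a} It was [MASK]")
loader = prompt_data_loader(dataset, template, TokenizerWrapper(ToyTokenizer(), max_seq_length=6), batch_size=2)
batches = list(loader)
print(len(batches), batches[0][0])
# 2 {'input_ids': [1, 2, 3, 4, 5, 0], 'attention_mask': [1, 1, 1, 1, 1, 0]}
```

Keeping the template separate from tokenization is what lets you try a new prompt wording by changing one string while the rest of the pipeline stays the same.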

Designed with interoperability in mind, OpenPrompt integrates smoothly into Python-based machine learning environments, enhancing existing workflows rather than overhauling them. Its compatibility with Hugging Face's ecosystem allows teams to utilize pre-existing model repositories and deployment pipelines, minimizing disruptions to established systems.

This integration-first approach makes OpenPrompt an appealing choice for organizations that have already invested in Python-based ML infrastructure, since it adds advanced prompt engineering capabilities without requiring major changes to that infrastructure.

Prompt Engine aims to simplify the management of prompt workflows for developers and teams. It offers simple API integration and basic version tracking, but its model selection is more limited and its enterprise support is minimal. Detailed public documentation on its multi-model support and integration features is still sparse, so teams should verify its capabilities against their own requirements before adopting it.

PromptPerfect focuses on prompt optimization rather than on orchestration or complex integrations. It primarily supports GPT, Claude, and Midjourney, keeps a history of optimizations, and is available through browser extensions and API access, with plans for both individuals and teams.

LangSmith works seamlessly with or without LangChain, offering compatibility with any large language model (LLM) application. Its design is crafted to fit into a variety of tech stacks and environments, making it a versatile choice for developers and enterprises alike.

With its model-agnostic approach, LangSmith empowers teams to select the most suitable LLM for their needs, without being tied to a specific vendor. This flexibility ensures that users can adapt to evolving requirements and take advantage of the best tools available.

For enterprises prioritizing data security and compliance, LangSmith offers self-hosting options. Organizations can deploy the platform on private Kubernetes clusters, retaining complete control over sensitive data. This setup is ideal for businesses with strict security protocols or regulatory requirements.

LangSmith is built to integrate effortlessly with external tools and platforms. It supports TensorFlow and Kubernetes, works with leading cloud providers like AWS, GCP, and Azure, and accommodates hybrid and on-premises deployments. For DevOps teams, LangSmith also supports logging traces using standard OpenTelemetry clients, ensuring smooth monitoring and troubleshooting.

Take a look at the table below to compare key workflow capabilities across different platforms:

| Tool | Multi-Model Support | Version Control | Enterprise Features | Integration Capabilities |
|---|---|---|---|---|
| Prompts.ai | 35+ models including GPT-5, Grok-4, Claude, LLaMA, and Gemini | Integrated version management | Enterprise governance with audit trails and real-time cost controls (up to 98% cost reduction) | Integrations with major cloud providers (AWS, GCP, Azure) and pay-as-you-go TOKN credit system |
| LangChain | Model-agnostic framework | Git-based version control | Limited enterprise features | Extensive third-party integrations with Python and JavaScript SDKs |
| PromptLayer | Supports providers such as OpenAI, Anthropic, and Cohere | Tracks prompt history | Basic team management | REST API and webhook support |
| Agenta | Connects with multiple LLM providers | Git integration for prompts | Collaboration tools for teams | Docker deployment along with API endpoints |
| OpenPrompt | Focused on research-oriented models | Manual versioning | Academic licensing options | Integration with PyTorch and other research frameworks |
| Prompt Engine | More limited range of model selections | Basic version tracking | Minimal enterprise support | Simple API integration |
| PromptPerfect | Primarily supports GPT, Claude, and Midjourney | Maintains optimization history | Plans for individuals and teams | Browser extensions and API access |
| LangSmith | Model-agnostic approach | Comprehensive version control | Enterprise-grade features | Multi-cloud environment integrations |

Some platforms cater to developers with advanced versioning tools, while others focus on academic use or small teams. For businesses prioritizing cost efficiency, governance, or scalability, certain platforms like Prompts.ai stand out with features like integrated cost controls and extensive model support. Choose the platform that aligns best with your specific needs, whether that’s reducing costs, managing workflows, or speeding up development processes.

Choosing the right tools for prompt engineering is a balancing act that depends on your technical needs, scalability goals, integration requirements, and budget constraints. Each platform brings its own strengths, tailored to specific organizational priorities and workflows.

For enterprises aiming to streamline cost management and improve governance, platforms like Prompts.ai offer real-time controls and detailed audit trails. If flexibility in development is your priority, framework-based solutions such as LangChain might be a better fit. Meanwhile, teams focused on research-driven projects could find specialized tools like OpenPrompt more aligned with their goals.

Your decision should not only address current needs but also anticipate future growth. Look for platforms that provide robust API support, cloud compatibility, and SDK integrations to ensure smooth implementation. Some tools excel in offering access to advanced models, while others focus on specific providers or research applications. Whether you need straightforward REST API connectivity or more intricate multi-cloud integrations, your choice should reflect your infrastructure and operational demands.

In some cases, combining multiple tools can be a smart strategy. For example, a research team might rely on OpenPrompt for academic exploration, while production workflows could benefit from enterprise-grade platforms that emphasize governance and compliance. The goal is to integrate these tools without creating data silos or introducing inefficiencies.

When considering budget, think beyond the upfront costs. Factor in ongoing operational expenses, scaling fees, and potential hidden charges. Pay-as-you-go models, such as TOKN credits, tie spending to actual usage, which suits organizations whose AI usage varies: light months cost less than a flat subscription would, while heavy months cost more, so monitoring usage matters.
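A quick break-even calculation makes this trade-off concrete. The sketch below compares a flat monthly subscription with per-token billing over a quarter with uneven usage. The $500 fee and $5-per-million-token rate are hypothetical numbers chosen for illustration; they are not TOKN pricing or any vendor's actual rates.

```python
from typing import List

# Hypothetical prices for illustration only; not any vendor's actual rates.
SUBSCRIPTION_PER_MONTH = 500.0   # flat fee, USD
PRICE_PER_MILLION_TOKENS = 5.0   # pay-as-you-go rate, USD


def pay_as_you_go_cost(tokens: int) -> float:
    return tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS


def break_even_tokens() -> float:
    """Monthly volume at which both pricing models cost the same."""
    return SUBSCRIPTION_PER_MONTH / PRICE_PER_MILLION_TOKENS * 1_000_000


monthly_usage: List[int] = [20_000_000, 150_000_000, 40_000_000]  # an uneven quarter
payg = [pay_as_you_go_cost(t) for t in monthly_usage]
print(payg)                                                    # [100.0, 750.0, 200.0]
print(sum(payg), SUBSCRIPTION_PER_MONTH * len(monthly_usage))  # 1050.0 1500.0
print(break_even_tokens())                                     # 100000000.0
```

In this made-up quarter, pay-as-you-go is cheaper overall, yet the second month exceeds the 100-million-token break-even point and costs more than the subscription would have. Running the same comparison on your own usage history is a simple way to choose between the two models.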

Finally, aligning tools with your team's expertise and standardizing workflows is essential. The best prompt engineering strategy combines the right technology with proper team training and process alignment. Look for platforms that not only meet your technical requirements but also support team development with thorough documentation, active communities, and training resources.

When choosing a prompt engineering tool for your AI workflows, focus on solutions that seamlessly integrate with your current systems and enable interoperable workflows. Look for tools that provide structured support for prompts, work well with a variety of AI models, and include features for testing and refining prompts to maintain accuracy and efficiency.

You should also evaluate the tool's scalability to meet your enterprise's growing demands and its usability for your team. A thoughtfully designed tool can simplify processes, boost productivity, and enhance the performance of your AI-powered systems.

Prompt engineering tools streamline AI workflows by making it easier to create, test, and deploy prompts. This saves resources and accelerates development timelines. By refining prompts, organizations can achieve more accurate responses, better scalability, and improved performance, all while cutting operational costs and delivering results faster.

For example, pay-per-use credit models allow businesses to pay solely for what they use, offering a practical way to reduce expenses. Moreover, effective prompt management minimizes delays and simplifies processes, boosting the efficiency and cost-effectiveness of AI applications.

Prompt engineering tools make it easier to work with AI systems by providing features for designing, testing, and deploying prompts directly into existing workflows. Many of these tools come with low-code or no-code interfaces, allowing users to integrate prompts into AI-driven applications while including options like conditional logic and adjustments tailored to specific models.
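Conditional logic and model-specific adjustments often come down to assembling the prompt from parts that depend on workflow data and on the target model. The sketch below shows this in plain Python; the model names, the per-model style settings, and the customer-tier rule are all hypothetical examples, not settings from any particular tool.

```python
from typing import Optional

# Hypothetical per-model adjustments; actual differences depend on each provider's guidance.
MODEL_STYLES = {
    "chat-model": {"system_prefix": "System: ", "max_words": 150},
    "compact-model": {"system_prefix": "", "max_words": 60},
}


def build_prompt(model: str, task: str, customer_tier: str, context: Optional[str] = None) -> str:
    style = MODEL_STYLES[model]
    parts = [f"{style['system_prefix']}You are a support assistant."]
    if customer_tier == "enterprise":  # conditional branch driven by workflow data
        parts.append("Mention the customer's dedicated account manager.")
    if context:  # include retrieved context only when the workflow supplies it
        parts.append(f"Context: {context}")
    parts.append(f"Task: {task}")
    parts.append(f"Answer in at most {style['max_words']} words.")  # model-specific length limit
    return "\n".join(parts)


print(build_prompt("compact-model", "Explain how to reset a password.", "enterprise"))
```

Keeping model-specific settings in a lookup table rather than in `if` branches means supporting another model is a data change, not a code change.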

By simplifying how prompts are managed and incorporated, these tools boost the efficiency of AI workflows and improve how well systems work together. They play a key role in embedding AI into enterprise operations, ensuring that AI systems actively support decision-making and help achieve operational objectives.